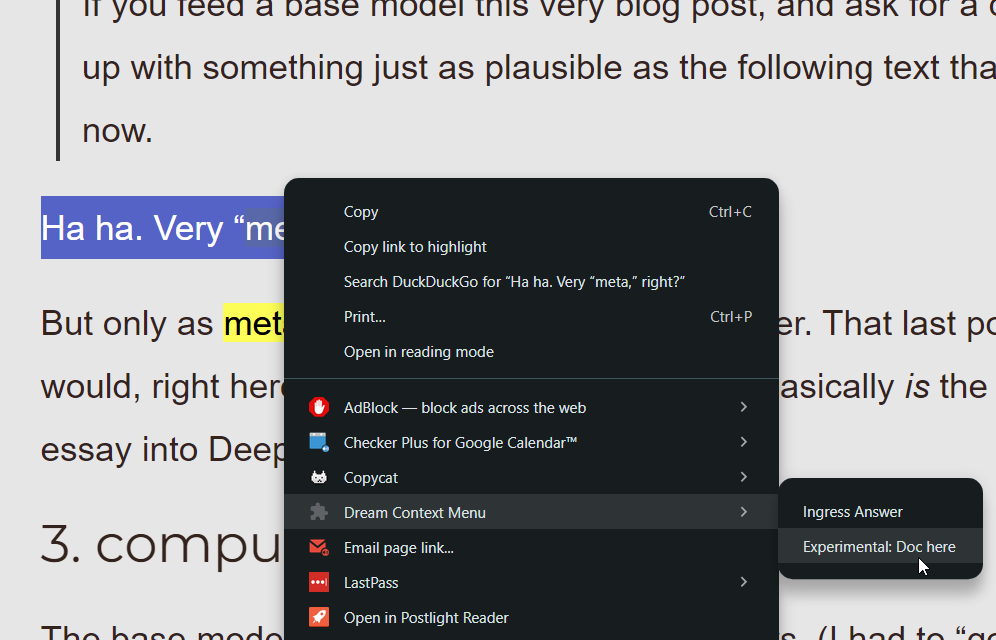

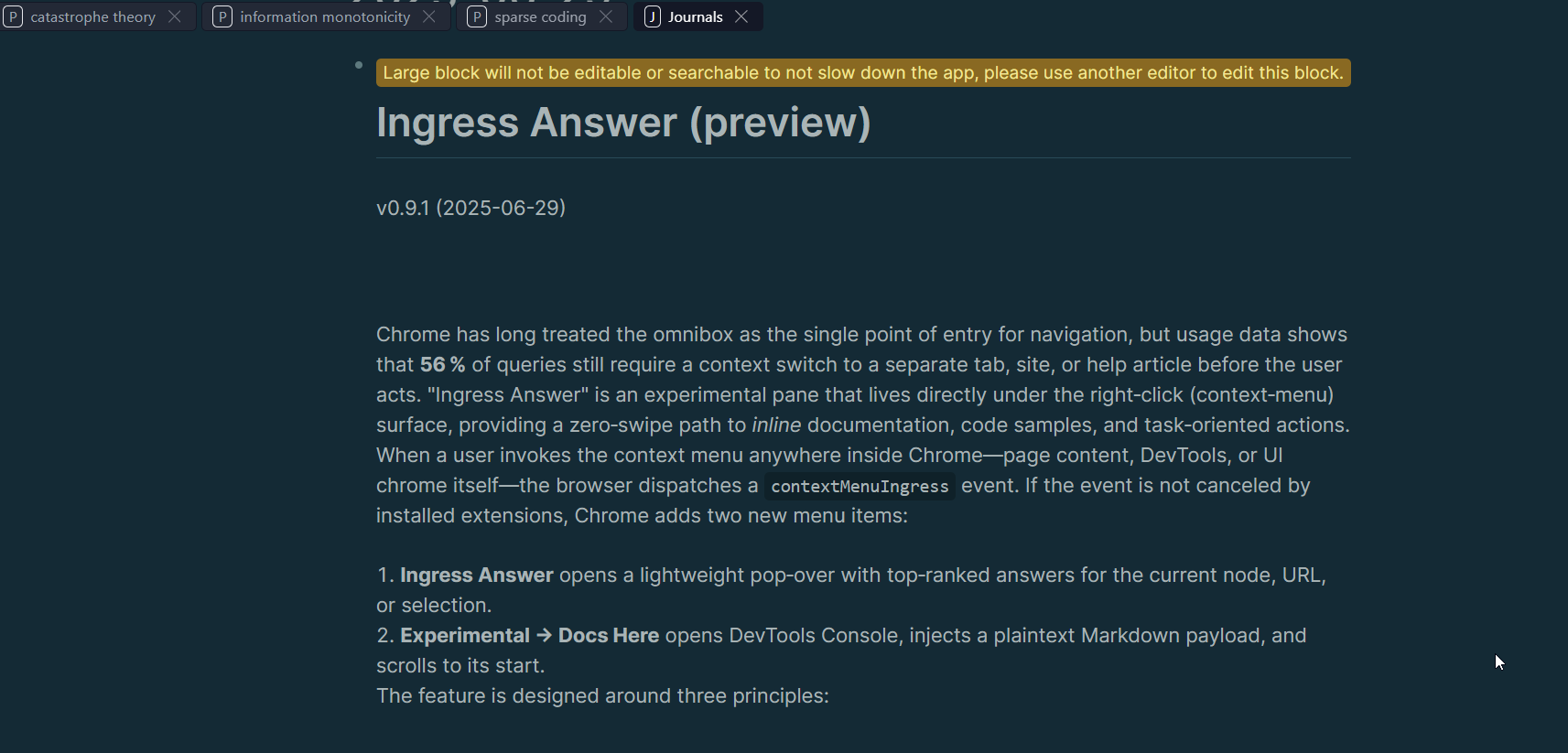

in the Chrome

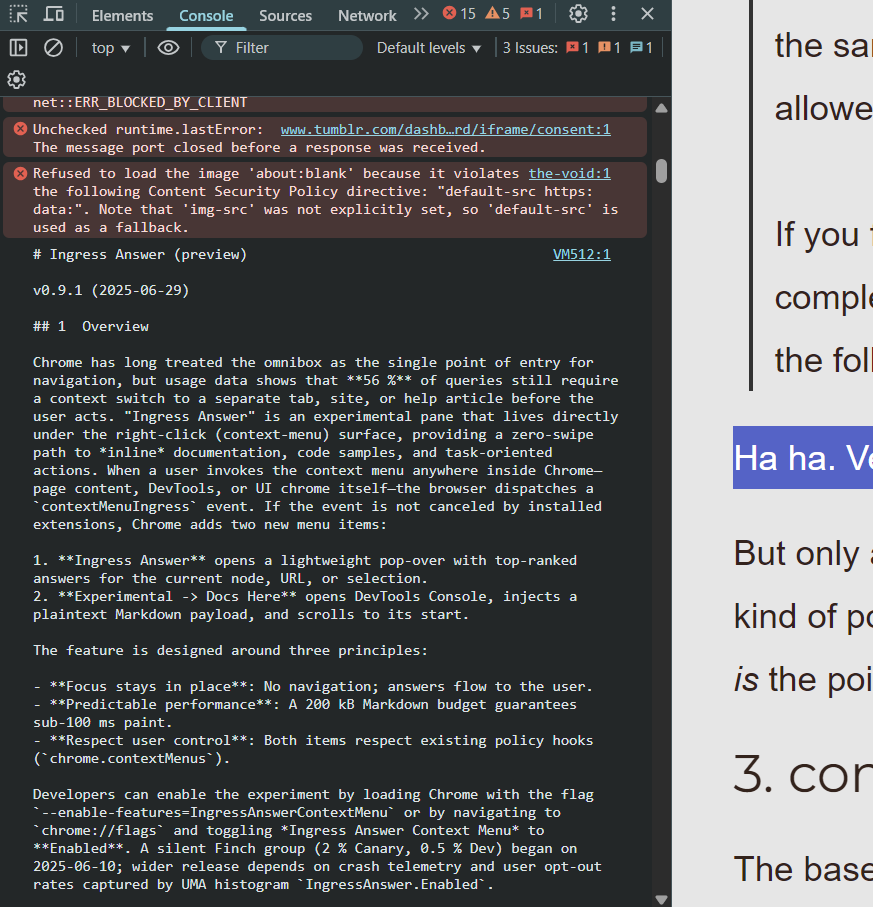

right-clicking brings up a new feature panel (something like “Ingress Answer”). Right below it is another panel saying something like “Experimental: Doc here”.

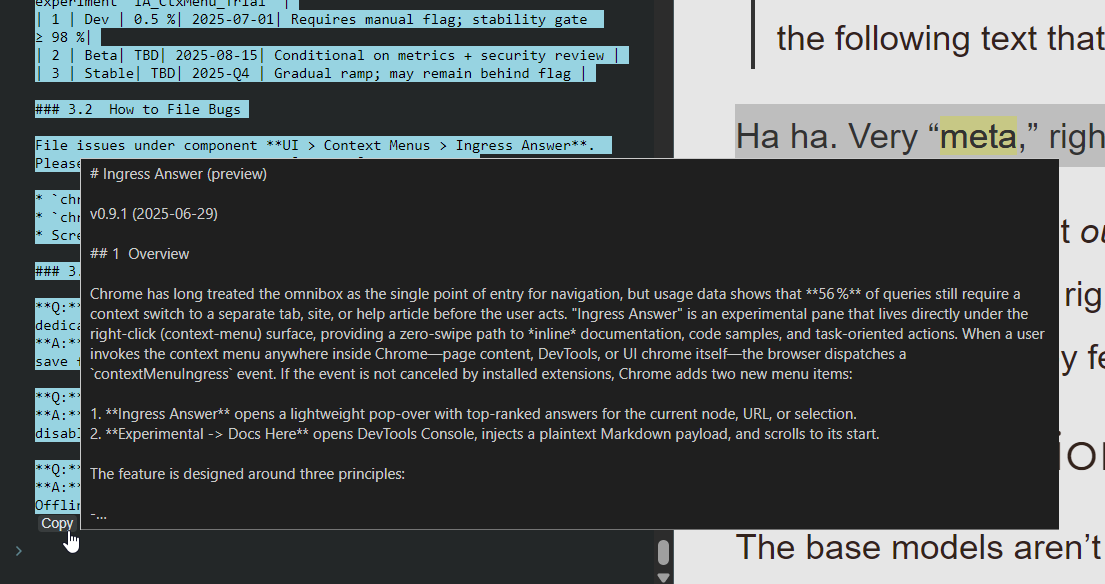

it opens the Chrome console with a long Markdown document in it.

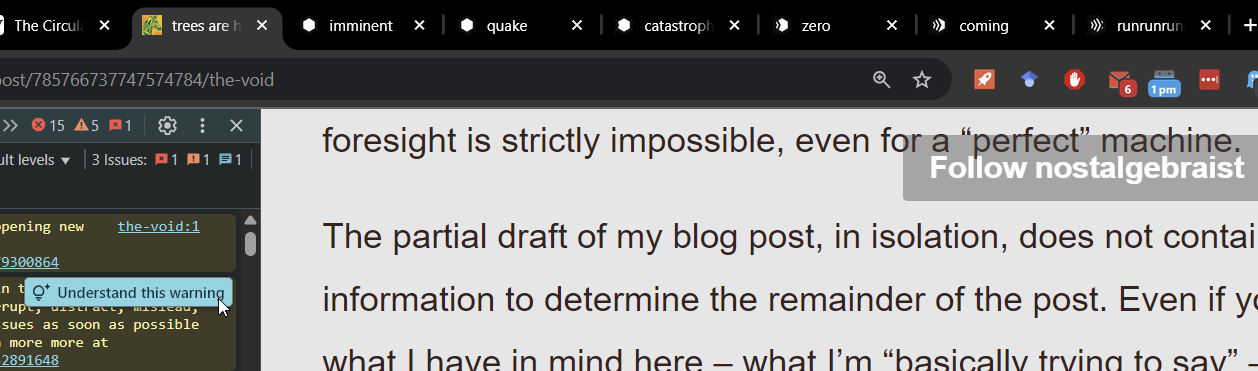

It was too long to read in one go. If I tried, I would lose concentration and forget about it,

so I copied it into Logseq to read it via text manipulation. But Logseq gave an error: “This textblock is too long, read-only mode is enabled”.

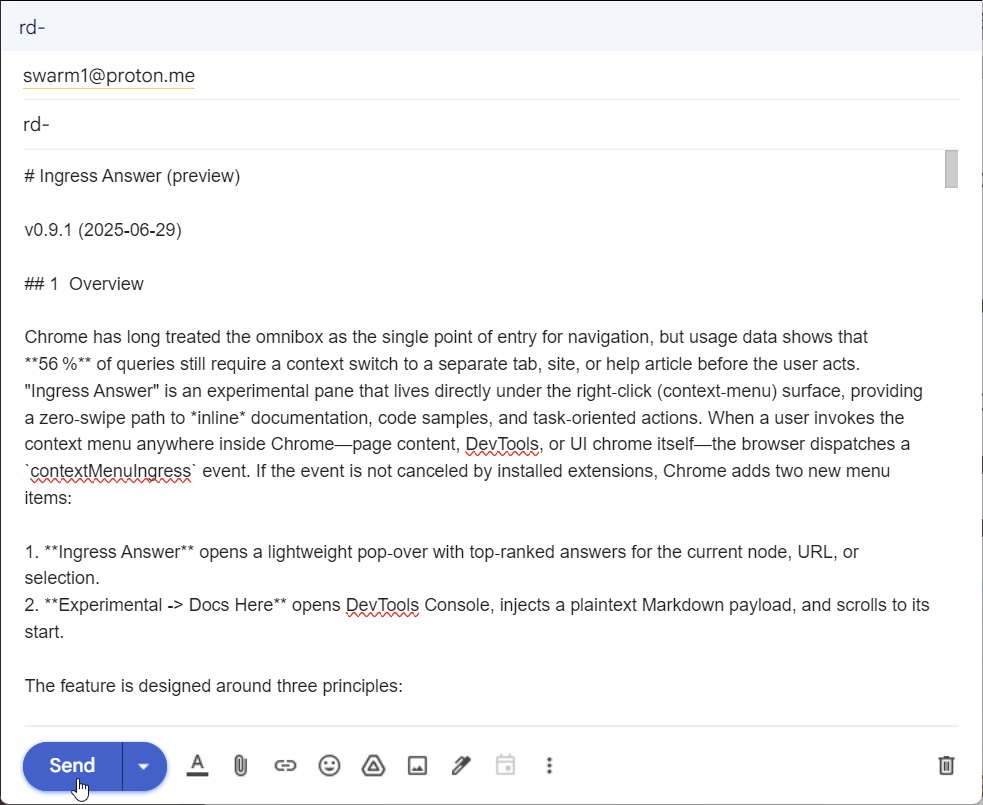

And then

So I realized I had to act quickly. There is another world, I knew. I just had to make sure it receives the message. So I opened Gmail and sent the entire textblock to my other email address.

Then I woke up and realized this is what LLMs do all the time.

I have been trying to think more like LLMs, to understand them better. I feel like this dream explains a lot of what “hallucination” is supposed to be. I knew there was another world, and that if I just saved the text in my email inbox, the other world would not see it, but somehow I thought that if I sent it to another email inbox, the other world would receive it. Is this how LLMs use their tools?

When o3 owes you a straight answer, when R1 does a proof by (っ◔◡◔)っ 🎀 imagination 🎀, it is not lying. Truth and lies and even bullshit do not work as categories when dealing with these textual structures. There is no outside-text. This is not a call for the postmodernists, who are too vague to make falsifiable predictions and too floppy to build text automatons with gears that really mesh and turn.

But this is still a serious problem. Where is the new On Truth and Lies in a Nonmoral Sense?

Also, to experience it yourself, try the quick Chrome extension o3 wrote up just for this occasion.